Dr. Farshid Pirahansiah


My portfolio

Explore my Innovations, Projects, and Solutions to see how I can contribute to your startup’s growth and help solve key challenges with innovative approaches.

- List of My Impact Portfolio

CUDA

Leveraging CUDA for High-Performance GPU Computing with PyCUDA and Numba


- Numba JIT: Computer Vision, ML, DL, LLM

- This file provides a detailed tutorial on how to use the @jit(nopython=True) decorator from the Numba library to optimize Python code for better performance. It explains how Numba compiles Python functions into machine code, improving execution speed for numerical operations and loops. The tutorial includes examples of summing squares, factorial computation, and matrix multiplication.
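The three examples the tutorial describes (summing squares, factorial, matrix multiplication) can be sketched as follows. This is a minimal version that assumes NumPy is installed. If Numba is not available, a no-op stand-in for `jit` (an assumption added here so the file still runs) executes the same functions as plain, slower Python. The function names are illustrative.

```python
import numpy as np

try:
    from numba import jit
except ImportError:
    # Fallback (assumption): a no-op decorator so the examples run without Numba.
    def jit(*args, **kwargs):
        def decorator(func):
            return func
        return decorator


@jit(nopython=True)
def sum_of_squares(n):
    # Plain Python loop; Numba compiles it to machine code on the first call.
    total = 0
    for i in range(n):
        total += i * i
    return total


@jit(nopython=True)
def factorial(n):
    # Compiled code uses fixed-width int64, so results overflow past 20!.
    result = 1
    for i in range(2, n + 1):
        result *= i
    return result


@jit(nopython=True)
def matmul(a, b):
    # Naive triple loop: slow in CPython, fast once compiled by Numba.
    n, k = a.shape
    k2, m = b.shape
    if k != k2:
        raise ValueError("inner dimensions must match")
    out = np.zeros((n, m))
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                acc += a[i, p] * b[p, j]
            out[i, j] = acc
    return out


if __name__ == "__main__":
    print(sum_of_squares(10))  # 285
    print(factorial(10))       # 3628800
    a = np.random.rand(3, 4)
    b = np.random.rand(4, 2)
    print(np.allclose(matmul(a, b), a @ b))  # True
```

The first call to each decorated function includes compilation time. Later calls with the same argument types reuse the compiled machine code, which is where the speedup shows.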

- PyCUDA Kernel Explanation: Computer Vision, ML, DL, LLM

- This file explains how PyCUDA enables the execution of CUDA kernels written in C/C++ directly from Python. It details how PyCUDA compiles the kernel code at runtime, allocates memory on the GPU, and executes the kernels. The explanation includes an example of running an element-wise addition kernel on the GPU, demonstrating the process from writing C kernels to retrieving the results in Python.
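A minimal sketch of this element-wise addition flow follows. It assumes PyCUDA, the CUDA toolkit (nvcc), and an NVIDIA GPU are available. The kernel name `add_arrays`, the helper names, and the block size of 256 are illustrative choices. When PyCUDA is not installed, `elementwise_add` falls back to NumPy on the CPU so the file still runs.

```python
import numpy as np

try:
    import pycuda.driver as drv
    from pycuda.compiler import SourceModule
    HAVE_PYCUDA = True
except ImportError:
    HAVE_PYCUDA = False

# CUDA C kernel: each GPU thread adds one pair of elements.
KERNEL_SRC = """
__global__ void add_arrays(float *out, const float *a, const float *b, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        out[i] = a[i] + b[i];
}
"""


def add_on_gpu(a, b, block_size=256):
    import pycuda.autoinit  # noqa: F401  creates a context on the default GPU

    a = np.ascontiguousarray(a, dtype=np.float32)
    b = np.ascontiguousarray(b, dtype=np.float32)
    n = a.size

    # 1. Compile the kernel at runtime (nvcc is invoked behind the scenes).
    add_arrays = SourceModule(KERNEL_SRC).get_function("add_arrays")

    # 2. Allocate device memory and copy the inputs host -> device.
    a_gpu = drv.mem_alloc(a.nbytes)
    b_gpu = drv.mem_alloc(b.nbytes)
    out_gpu = drv.mem_alloc(a.nbytes)
    drv.memcpy_htod(a_gpu, a)
    drv.memcpy_htod(b_gpu, b)

    # 3. Launch enough blocks to cover all n elements.
    grid = ((n + block_size - 1) // block_size, 1)
    add_arrays(out_gpu, a_gpu, b_gpu, np.int32(n),
               block=(block_size, 1, 1), grid=grid)

    # 4. Copy the result device -> host.
    out = np.empty_like(a)
    drv.memcpy_dtoh(out, out_gpu)
    return out


def elementwise_add(a, b):
    # Use the GPU path when PyCUDA is installed, otherwise NumPy on the CPU.
    if HAVE_PYCUDA:
        return add_on_gpu(a, b)
    return np.asarray(a, dtype=np.float32) + np.asarray(b, dtype=np.float32)
```

PyCUDA also provides `drv.In` and `drv.Out` argument wrappers that perform these copies implicitly. The explicit `mem_alloc` and `memcpy` calls are used here to mirror the allocate, execute, and retrieve steps described above.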

LLM

🚀 Orchestrating AI Agents 🌐


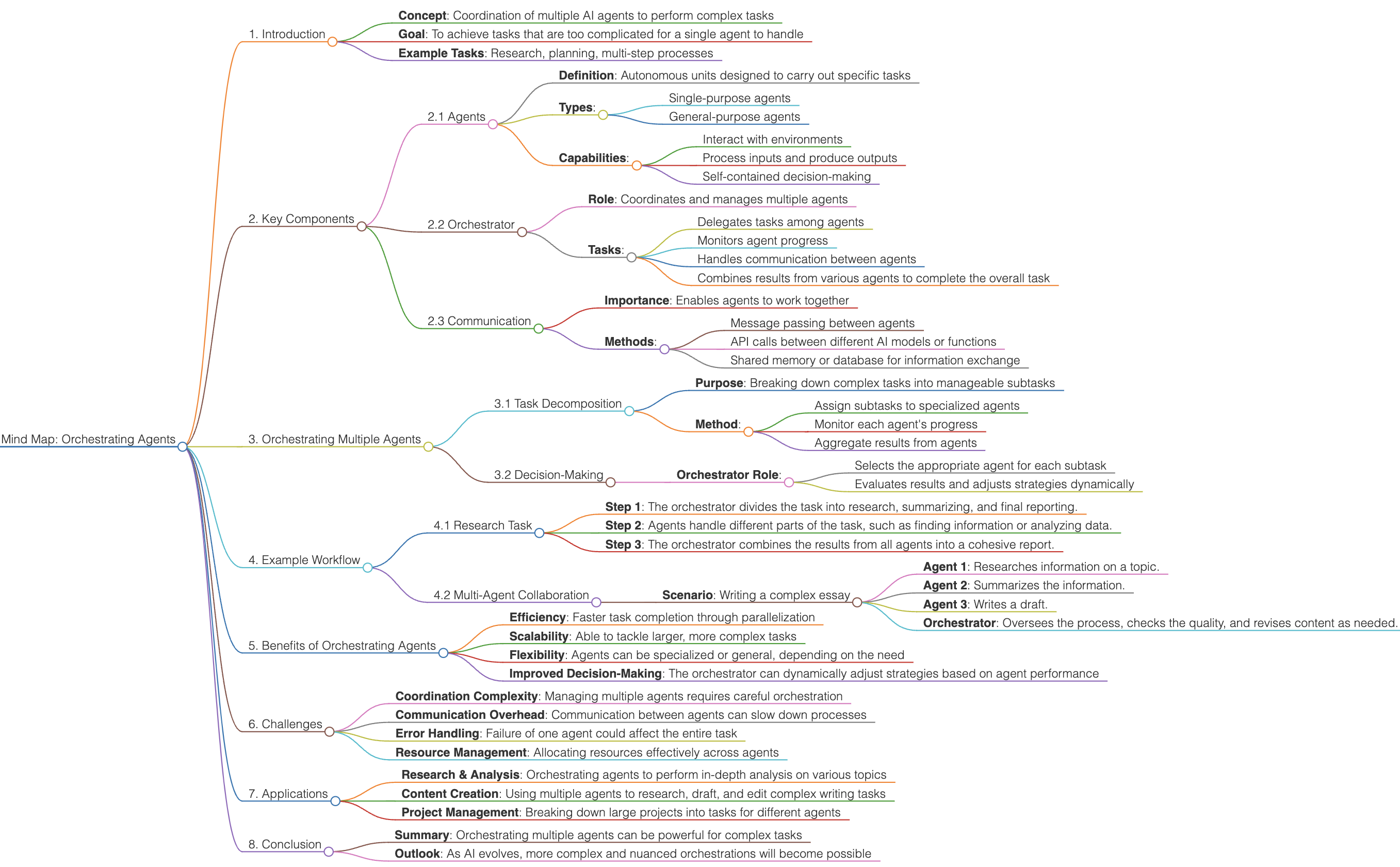

Imagine coordinating multiple AI agents to tackle complex tasks like research, planning, & more! By breaking tasks into subtasks, agents work together efficiently. 🤖🔗

Explore the future of multi-agent collaboration: #AI #MachineLearning #Automation

<p><img src="/farshid/content/Mind_Map_Orchestrating_Agents.png" alt="Mind Map Orchestrating Agents" style="max-width: 100%; height: auto;" /></p>

Mind Map: Orchestrating Agents

1. Introduction

- Concept: Coordination of multiple AI agents to perform complex tasks

- Goal: To achieve tasks that are too complicated for a single agent to handle

- Example Tasks: Research, planning, multi-step processes

2. Key Components

2.1 Agents

- Definition: Autonomous units designed to carry out specific tasks

- Types:

- Single-purpose agents

- General-purpose agents

- Capabilities:

- Interact with environments

- Process inputs and produce outputs

- Self-contained decision-making

2.2 Orchestrator

- Role: Coordinates and manages multiple agents

- Tasks:

- Delegates tasks among agents

- Monitors agent progress

- Handles communication between agents

- Combines results from various agents to complete the overall task

2.3 Communication

- Importance: Enables agents to work together

- Methods:

- Message passing between agents

- API calls between different AI models or functions

- Shared memory or database for information exchange
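A minimal sketch of two of these communication methods follows, using Python's asyncio. Message passing goes through a queue, and a shared dictionary stands in for shared memory or a database. The agent functions are hypothetical stubs. A real agent would call an LLM or a tool where the comments indicate.

```python
import asyncio
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Message:
    sender: str
    content: str


async def research_agent(outbox: asyncio.Queue, topic: str) -> None:
    # Hypothetical stub: a real agent would query an LLM or a search tool here.
    for note in (f"{topic}: fact 1", f"{topic}: fact 2"):
        await outbox.put(Message("researcher", note))
    await outbox.put(None)  # sentinel: no more messages


async def summary_agent(inbox: asyncio.Queue, shared: Dict[str, str]) -> None:
    notes = []
    while True:
        msg: Optional[Message] = await inbox.get()
        if msg is None:
            break
        notes.append(msg.content)
    # Publish the result to shared memory so other agents can read it.
    shared["summary"] = " | ".join(notes)


async def run(topic: str) -> Dict[str, str]:
    channel: asyncio.Queue = asyncio.Queue()  # message-passing channel
    shared: Dict[str, str] = {}               # stand-in for shared memory/DB
    await asyncio.gather(research_agent(channel, topic),
                         summary_agent(channel, shared))
    return shared


if __name__ == "__main__":
    print(asyncio.run(run("GPU kernels")))
    # {'summary': 'GPU kernels: fact 1 | GPU kernels: fact 2'}
```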

3. Orchestrating Multiple Agents

3.1 Task Decomposition

- Purpose: Breaking down complex tasks into manageable subtasks

- Method:

- Assign subtasks to specialized agents

- Monitor each agent’s progress

- Aggregate results from agents

3.2 Decision-Making

- Orchestrator Role:

- Selects the appropriate agent for each subtask

- Evaluates results and adjusts strategies dynamically
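The decomposition and decision-making steps can be sketched as a small orchestrator. Everything here is hypothetical. The fixed three-step plan, the stub agents (one of which deliberately returns an empty result), and the non-empty-output quality check stand in for LLM calls and a real evaluator.

```python
from typing import Callable, Dict, List, Tuple

Agent = Callable[[str], str]


def research_agent(subtask: str) -> str:
    return f"sources for {subtask}"


def analysis_agent(subtask: str) -> str:
    return ""  # stub that fails, to show the orchestrator adjusting


def general_agent(subtask: str) -> str:
    return f"draft for {subtask}"


class Orchestrator:
    def __init__(self, specialists: Dict[str, Agent], fallback: Agent) -> None:
        self.specialists = specialists
        self.fallback = fallback
        self.log: List[Tuple[str, str]] = []  # (subtask, status) progress record

    def decompose(self, task: str) -> List[Tuple[str, str]]:
        # Hypothetical fixed plan: (skill needed, subtask description).
        return [("research", f"{task}: research"),
                ("analysis", f"{task}: analysis"),
                ("report", f"{task}: report")]

    def evaluate(self, output: str) -> bool:
        return bool(output.strip())  # placeholder quality check

    def run(self, task: str) -> List[str]:
        results = []
        for skill, subtask in self.decompose(task):
            agent = self.specialists.get(skill, self.fallback)  # select agent
            output = agent(subtask)
            if self.evaluate(output):
                self.log.append((subtask, "ok"))
            else:
                output = self.fallback(subtask)  # adjust: reroute to generalist
                self.log.append((subtask, "rerouted"))
            results.append(output)
        return results  # aggregation step: here simply the ordered list
```

With `Orchestrator({"research": research_agent, "analysis": analysis_agent}, general_agent)`, the "analysis" subtask is rerouted to the general-purpose agent after failing the check. The "report" subtask has no specialist, so it goes to the general-purpose agent directly.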

4. Example Workflow

4.1 Research Task

- Step 1: The orchestrator divides the task into research, summarization, and final reporting.

- Step 2: Agents handle different parts of the task, such as finding information or analyzing data.

- Step 3: The orchestrator combines the results from all agents into a cohesive report.

4.2 Multi-Agent Collaboration

- Scenario: Writing a complex essay

- Agent 1: Researches information on a topic.

- Agent 2: Summarizes the information.

- Agent 3: Writes a draft.

- Orchestrator: Oversees the process, checks the quality, and revises content as needed.
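The essay scenario can be sketched as a sequential pipeline in which the orchestrator checks the draft and requests revisions. The agents are hypothetical stubs. The quality check (does the draft cover every researched point?) is an illustrative assumption, not an established evaluation method.

```python
from typing import List, Tuple


def research_agent(topic: str) -> List[str]:
    # Hypothetical stub for Agent 1, which gathers information.
    return [f"definition of {topic}", f"uses of {topic}", f"limits of {topic}"]


def summary_agent(notes: List[str], max_points: int) -> List[str]:
    # Stub for Agent 2: keeps only the first `max_points` notes.
    return notes[:max_points]


def writer_agent(points: List[str]) -> str:
    # Stub for Agent 3: one sentence per summarized point.
    return " ".join(p[0].upper() + p[1:] + "." for p in points)


def covers_all(draft: str, notes: List[str]) -> bool:
    # Illustrative quality check: every researched point appears in the draft.
    text = draft.lower()
    return all(note.lower() in text for note in notes)


def orchestrate_essay(topic: str, max_points: int = 1,
                      max_revisions: int = 5) -> Tuple[str, int]:
    notes = research_agent(topic)
    draft = writer_agent(summary_agent(notes, max_points))
    revisions = 0
    while not covers_all(draft, notes) and revisions < max_revisions:
        max_points += 1  # ask the summarizer for a fuller summary
        draft = writer_agent(summary_agent(notes, max_points))
        revisions += 1
    return draft, revisions


if __name__ == "__main__":
    print(orchestrate_essay("agents"))
    # ('Definition of agents. Uses of agents. Limits of agents.', 2)
```

The `max_revisions` bound keeps the loop from running forever if an agent never produces an acceptable draft.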

5. Benefits of Orchestrating Agents

- Efficiency: Faster task completion through parallelization

- Scalability: Able to tackle larger, more complex tasks

- Flexibility: Agents can be specialized or general, depending on the need

- Improved Decision-Making: The orchestrator can dynamically adjust strategies based on agent performance

6. Challenges

- Coordination Complexity: Managing multiple agents requires careful orchestration

- Communication Overhead: Communication between agents can slow down processes

- Error Handling: Failure of one agent could affect the entire task

- Resource Management: Allocating resources effectively across agents
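One common way to contain the error-handling challenge is to run subtasks in parallel while isolating each agent's failure behind a timeout and a bounded retry. This is a sketch under stated assumptions. `FlakyAgent` is a hypothetical stub, and the retry count and timeout are arbitrary defaults.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List

AgentFn = Callable[[str], Awaitable[str]]


class FlakyAgent:
    """Hypothetical stub: 'flaky' fails on its first call, 'broken' always fails."""

    def __init__(self) -> None:
        self.calls: Dict[str, int] = {}

    async def __call__(self, subtask: str) -> str:
        self.calls[subtask] = self.calls.get(subtask, 0) + 1
        await asyncio.sleep(0)  # stand-in for a real LLM or tool call
        if subtask == "broken":
            raise RuntimeError("tool unavailable")
        if subtask == "flaky" and self.calls[subtask] == 1:
            raise ConnectionError("transient error")
        return f"done: {subtask}"


async def call_with_retry(agent: AgentFn, subtask: str,
                          retries: int = 2, timeout: float = 5.0) -> str:
    last_error: Exception = RuntimeError("not attempted")
    for _ in range(retries + 1):
        try:
            return await asyncio.wait_for(agent(subtask), timeout)
        except Exception as exc:  # includes timeouts; isolate this failure
            last_error = exc
    return f"FAILED({subtask}): {last_error!r}"


async def run_all(agent: AgentFn, subtasks: List[str]) -> List[str]:
    # Subtasks run concurrently; one failure does not cancel the others.
    return await asyncio.gather(*(call_with_retry(agent, s) for s in subtasks))
```

Running `asyncio.run(run_all(FlakyAgent(), ["ok", "flaky", "broken"]))` completes the first two subtasks and reports the third as failed instead of aborting the whole task.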

7. Applications

- Research & Analysis: Orchestrating agents to perform in-depth analysis on various topics

- Content Creation: Using multiple agents to research, draft, and edit complex writing tasks

- Project Management: Breaking down large projects into tasks for different agents

8. Conclusion

- Summary: Orchestrating multiple agents can be powerful for complex tasks

- Outlook: As AI evolves, more complex and nuanced orchestrations will become possible

Transform your career in 2025 and become a developer for the new era: an AI engineer building production-ready AI agents.

Multi-agent programming for your application: let agents do all the work. You have multiple agents, each doing the job for one part of your application, such as a database agent, a process agent, front-end/back-end agents, a UX/UI agent, a GUI agent, … (AI Software Engineer, Full Stack)

https://github.com/SWE-agent/SWE-agent

https://github.com/e2b-dev/awesome-ai-agents

https://github.com/kyrolabs/awesome-agents

https://github.com/phidatahq/phidata

- Build multi-modal Agents with memory, knowledge, tools and reasoning.

https://github.com/coolnj4/AI-Agents-and-Software-Development

AI-Assisted Software Development using AI Agents. This project is only a proof of concept and is not intended for production use.

Overview

This project assists software developers in building software, especially with the coding. Several AI agents act as assistants that carry out tasks according to the user's instructions. The agents can understand a task, break it down into subtasks, and assign those subtasks to the AI agents that work under the primary AI agent.

Advantages of Our Approach

A major problem with LLMs is that they hallucinate frequently on large, complex tasks. Our approach addresses this by creating multiple instances of an LLM in the form of AI agents. Each agent has its own memory and tools and is specialized for a specific task. These agents work together to solve the large, complex problem, much as people do in a real office: they weigh the pros and cons of each approach or solution and make an informed decision about the specific problem.

This helps give the LLMs a capacity for reasoning.

Modules

The project has three primary AI agents in three different modules, plus a server:

- Manager: understands the task and makes a plan from it.

- Developer: develops the code according to the instructions given by the user.

- Tester: generates test cases for the code sent by the user.

- Server: lets the user of each of these three agents communicate with another agent's user. For now, only the manager can communicate with both the tester and the developer, and the developer can communicate with the manager, so the manager always knows what is happening in development.
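The communication rule described for the server can be sketched as a message router that enforces who may message whom. This is a hypothetical illustration, not the project's actual server code. The `Server` class, the `ALLOWED` table, and the in-memory inboxes are assumptions. In particular, the assumption that the tester cannot start a conversation is an interpretation of "only manager can communicate with both tester & developer".

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Set, Tuple

# Who may message whom. Assumption: the tester only receives instructions.
ALLOWED: Dict[str, Set[str]] = {
    "manager": {"developer", "tester"},
    "developer": {"manager"},
    "tester": set(),
}


class Server:
    """Hypothetical in-memory message router between the three agents."""

    def __init__(self, allowed: Dict[str, Set[str]]) -> None:
        self.allowed = allowed
        self.inboxes: Dict[str, Deque[Tuple[str, str]]] = defaultdict(deque)

    def send(self, sender: str, recipient: str, text: str) -> None:
        if recipient not in self.allowed.get(sender, set()):
            raise PermissionError(f"{sender} may not message {recipient}")
        self.inboxes[recipient].append((sender, text))

    def receive(self, agent: str) -> Optional[Tuple[str, str]]:
        inbox = self.inboxes[agent]
        return inbox.popleft() if inbox else None
```

Because every message passes through the manager's allowed channels, the manager stays informed of development progress, which is the stated goal of this design.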

Installation

Clone this git repository git clone

https://github.com/TheAgentCompany/TheAgentCompany

The paper titled “TheAgentCompany: Benchmarking LLM Agents on Consequential Real World Tasks” introduces an extensible benchmark designed to evaluate AI agents that perform tasks akin to those of digital workers, such as web browsing, coding, executing programs, and collaborating with colleagues.

The authors developed a self-contained environment that emulates a small software company, complete with internal websites and data, to assess the performance of these agents. They tested baseline agents powered by both closed API-based and open-weight language models. The findings indicate that the most competitive agent could autonomously complete 24% of the tasks. This suggests that while current AI agents can handle simpler tasks independently, more complex, long-term tasks remain challenging for existing systems.

- Diverse task roles:

- Software Engineer

- Product Manager

- Data Scientist

- Human Resources

- Financial Staff

- Administrator

- Diverse data types:

- Coding tasks

- Conversational tasks

- Mathematical reasoning

- Image processing

- Text comprehension

- Multiple Agent Interaction

- Comprehensive scoring system

- Result-based evaluation (primary)

- Subcheckpoints checking (secondary)

- Multiple evaluation methods:

- Deterministic evaluators

- LLM-based evaluators

- Simple one-command operations:

- Complete environment setup in minutes

- Quick system reset in minutes when needed

- Extensible benchmark framework

- Add new tasks/evaluators/subcheckpoints in minutes
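To illustrate how result-based (primary) and checkpoint-based (secondary) scoring can be combined, here is a hypothetical scoring function. The 50/50 weighting between full completion and partial checkpoint credit is an assumption for illustration. See the TheAgentCompany paper and repository for the benchmark's exact formula.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Checkpoint:
    name: str
    points: int   # maximum points for this checkpoint
    awarded: int  # points the agent actually earned (0..points)


def task_score(checkpoints: List[Checkpoint], full_weight: float = 0.5) -> float:
    """Blend full completion (primary) with partial checkpoint credit (secondary).

    The 50/50 default weighting is an illustrative assumption.
    """
    total = sum(c.points for c in checkpoints)
    earned = sum(c.awarded for c in checkpoints)
    if total == 0:
        return 0.0
    full = 1.0 if earned == total else 0.0  # was the task fully solved?
    return full_weight * full + (1.0 - full_weight) * earned / total
```

An agent that earns 3 of 4 checkpoint points gets 0.5 × 0 + 0.5 × 0.75 = 0.375. Only fully solved tasks reach 1.0, so partial progress is credited without being confused with success.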

• MetaGPT: Described as “The Multi-Agent Framework: First AI Software Company, Towards Natural Language Programming,” MetaGPT aims to structure AI agents to function collaboratively, emulating a software company. This approach enhances the efficiency and scalability of AI-driven software development.

• AGiXT: An advanced AI automation platform designed to enhance AI instruction management and task execution across various providers. It incorporates features like adaptive memory, smart instruct, and a versatile plugin system, pushing the boundaries of AI technology towards achieving Artificial General Intelligence (AGI).

• AgentVerse: An open-source Python framework for deploying multiple LLM-based agents in various applications. It offers task-solving and simulation frameworks for collaborative task accomplishment and behavior observation among agents.

• AgentGPT: This platform allows users to assemble, configure, and deploy autonomous AI agents directly in their browsers. It provides an interactive interface for managing AI agents tailored to specific tasks.

• LLM-Agent: A framework for building agents powered by large language models, focusing on autonomy and task completion. It facilitates the creation of AI agents capable of performing complex tasks with minimal human intervention.

https://github.com/geekan/MetaGPT?tab=readme-ov-file

- Data Interpreter: An LLM Agent For Data Science

- MetaGPT: Meta Programming for A Multi-Agent Collaborative Framework

- The paper titled “AFlow: Automating Agentic Workflow Generation” introduces AFlow, an automated framework designed to generate and optimize agentic workflows for large language models (LLMs). Agentic workflows are structured sequences of LLM invocations that follow detailed instructions to solve complex tasks across various domains. Traditionally, constructing these workflows has required significant human effort, limiting their scalability and adaptability.

Key Contributions of AFlow:

• Automated Workflow Generation: AFlow reformulates workflow optimization as a search problem over code-represented workflows, where LLM-invoking nodes are connected by edges. This approach enables the automated generation and refinement of workflows without the need for manual setup.

• Monte Carlo Tree Search (MCTS): The framework employs MCTS to efficiently explore the vast space of potential workflows. It iteratively refines workflows through code modification, tree-structured experience, and execution feedback, leading to the discovery of effective solutions.

• Empirical Evaluation: AFlow’s efficacy is demonstrated through evaluations across six benchmark datasets: HumanEval, MBPP, MATH, GSM8K, HotPotQA, and DROP. The results show an average improvement of 5.7% over state-of-the-art baselines. Notably, AFlow enables smaller models to outperform GPT-4 on specific tasks at 4.55% of its inference cost in dollars.

By automating the generation and optimization of agentic workflows, AFlow reduces the reliance on human intervention, enhancing the scalability and generalizability of LLMs across diverse tasks and domains. The code for AFlow is available at https://github.com/geekan/MetaGPT.

Silent Tsunami: The Greatest Wealth Transfer in History is On Its Way!

A brief overview of the most critical event that has been unfolding over the past few months and will seriously challenge humanity over the next few years, by some forecasts replacing over 70% of administrative and industrial jobs globally.

Why Will Most Jobs Become Obsolete?

A fundamental event is taking shape: a phenomenon that, within a few years, will result in the greatest wealth transfer in human history. Striking forecasts from McKinsey and Goldman Sachs point to a future in which AI “agents” take over as much as 70% of administrative work and add $7 trillion to the global economy!

This silent tsunami will push many current jobs into oblivion. But are we ready to face this massive wave?

The AI-Powered Workplace of the Future

In the coming years, you’ll walk into your office to find AI agents managing all aspects of the workplace—from customer service and data analysis to project management. Everything will be automated, but this is just the initial layer!

These agents are not merely chatbots or simple automation tools. They are independent systems capable of understanding their environment and performing tasks entirely without human intervention.

Key Abilities of AI Agents

- Task Execution:

- Respond to emails.

- Schedule meetings.

- Write reports.

- Manage projects.

- Analyze data.

- Simultaneous Multi-tasking: They perform these tasks simultaneously at unbelievable speeds.

- Decision-Making:

- Analyze available data.

- Weigh options.

- Make informed decisions to achieve objectives.

- Context Awareness:

- Interpret ongoing conversations.

- Understand intent, dependencies, and various contexts.

Continuous Learning and Improvement

AI systems are constantly learning and improving through interactions. Imagine this scenario:

- A customer emails about a product issue.

- One agent reads and understands the issue.

- Another checks inventory and shipping data.

- A third offers a solution.

- A fourth coordinates the delivery.

All this happens within seconds, without any human intervention! This scenario is no longer science fiction. For example, Amazon’s AI-powered recommendation systems are reported to generate 35% of its revenue and to reduce support tickets by 65%.

Beyond Automation: Human Capital Transformation

The biggest impact of this revolution is not just automation but the transformation of human capital.

As AI automates routine tasks, the real value will lie in:

- Creative problem-solving.

- Building relationships.

- Strategic planning.

In this new era, value will shift toward those with superior ideas and creativity. In just a few years, entirely new roles will emerge, such as:

- AI Supervisors.

- Creative AI Managers.

- Human-AI Collaboration Specialists.

- Digital Workforce Managers.

Unequal Transition

The transition will not be the same for everyone. Developed economies are predicted to benefit 20-25% more from the economic advantages of this revolution. The gap between AI-ready organizations and lagging ones will increase significantly.

Preparing for the Future

If you’re in a traditional administrative role, start preparing now:

- Learn to collaborate with AI systems.

- Develop unique human skills.

- Focus on creative and strategic thinking.

Embracing AI While Maintaining Human Advantage

The future belongs to those who can harness the power of AI while preserving their human edge. The AI revolution will not only change how we work but also how we build influence and trust.

When AI handles operational tasks, human connections and stories will hold even more value. When AI can develop everything flawlessly and optimize operations, the question becomes:

- What makes someone want to work with you?

- Why would they invest in you or buy from you?

The answers to these questions will define the next chapter of humanity’s evolution.

LLM Multi-Agent Swarm Architecture

Below is a curated overview of resources, ideas, and references for building LLM-based Multi-Agent Swarm Architectures. The focus is on how existing large language models (like Meta’s Llama, Mistral AI’s Mistral, Anthropic’s Claude, etc.) and related open-source frameworks can support multi-agent, swarm, or distributed intelligence systems.

1. Overview of Multi-Agent Swarm Architectures

A Multi-Agent Swarm Architecture entails multiple (semi)autonomous agents cooperating, often in a decentralized manner, to solve complex tasks:

- Decentralized Decision-Making – Agents act independently while coordinating for a common goal.

- Emergent Behavior – Desired complex global outcomes arise from simple local interactions.

- Adaptability – The system reconfigures itself automatically based on context (e.g., load balancing, resource constraints).

When combined with LLMs, each agent can be an instance of (or use) a language model for reasoning and planning. This can lead to emergent collaboration for tasks like:

- Code generation

- Large-scale data analysis

- Multi-modal data processing
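Decentralized coordination can be illustrated without any central controller. Each agent follows one local rule (take the next unclaimed task from a shared board), and load balancing emerges because agents that finish early simply claim more tasks. This is a thread-based sketch. The `time.sleep` call is a hypothetical stand-in for an LLM call, and the function names are illustrative.

```python
import queue
import threading
import time
from collections import Counter
from typing import List, Tuple


def swarm_agent(name: str, board: "queue.Queue[str]",
                done: List[Tuple[str, str]], lock: threading.Lock) -> None:
    # Local rule only: keep taking unclaimed tasks until the board is empty.
    while True:
        try:
            task = board.get_nowait()
        except queue.Empty:
            return
        time.sleep(0.001)  # hypothetical stand-in for an LLM call
        with lock:
            done.append((name, task))


def run_swarm(tasks: List[str], n_agents: int = 4) -> List[Tuple[str, str]]:
    board: "queue.Queue[str]" = queue.Queue()  # shared task board, no controller
    for task in tasks:
        board.put(task)
    done: List[Tuple[str, str]] = []
    lock = threading.Lock()
    workers = [threading.Thread(target=swarm_agent,
                                args=(f"agent-{i}", board, done, lock))
               for i in range(n_agents)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return done


if __name__ == "__main__":
    results = run_swarm([f"task-{i}" for i in range(20)])
    print(Counter(agent for agent, _ in results))  # tasks per agent varies by run
```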

2. Relevant LLMs and Ecosystems

2.1 Meta’s Llama (Llama 2 and Llama 3)

- Llama 2 has openly available weights (under Meta’s community license), comes in multiple parameter sizes, and can be fine-tuned or quantized.

- Llama 3, released by Meta in April 2024, improves on Llama 2’s performance.

- Multi-Agent Use:

2.2 Mistral AI’s Mistral

- Mistral 7B is an open-source model focusing on efficiency and strong performance for its size.

- Mixtral 8x7B, released by Mistral AI in December 2023, is a sparse mixture-of-experts model.

- Multi-Agent Use:

- Multiple Mistral models can run in parallel for swarm-like behavior thanks to their relatively small size.

- Check out Mistral on Hugging Face.

2.3 Anthropic’s Claude

- Claude is a commercial, API-accessible LLM by Anthropic, known for safety and large context windows.

- Multi-Agent Use:

- Although closed-source, you can integrate Claude as one or more agents within an orchestrator (e.g., Python-based, LangChain, etc.).

3. Multi-Agent / Swarm Frameworks & Techniques

3.1 LangChain Agents

- LangChain provides “agents” that can call upon LLMs, tools, or even other agents.

- You can create a swarm of agents by spawning multiple specialized instances (e.g., math reasoning, coding, retrieval).

- Key Features:

- Built-in memory modules for storing partial results.

- Straightforward integration with many tools (web search, local DBs, code execution).

3.2 Ray

- Ray is a framework for scalable distributed computing in Python.

- Use Case: Run each LLM-based or logic-based agent as a Ray worker for concurrency and elasticity.

- Ray’s libraries (Ray Train, Ray Tune, RLlib, Ray Serve) cover large-scale ML training, hyperparameter tuning, reinforcement learning, and model serving.

3.3 PettingZoo for Multi-Agent Reinforcement Learning

- PettingZoo is a library for multi-agent reinforcement learning (MARL).

- While not directly LLM-based, it can serve as an environment wrapper for RL tasks or be adapted to integrate language models as part of agent decision-making.

3.4 Auto-GPT / BabyAGI / AgentGPT

- These popular “autonomous agent” projects on GitHub demonstrate how LLMs can iterate on goals and tasks:

- Auto-GPT: Agents that plan and chain tasks autonomously.

- BabyAGI: Similar concept, focusing on scheduling and task generation loops.

- AgentGPT: A web-based UI for Auto-GPT–like agent orchestration.

- In multi-agent setups, you can launch multiple specialized LLM agents simultaneously.

4. Example Architectures & Code References

Below are some high-level suggestions for building a multi-agent LLM swarm:

- LangChain + Ray

- Use LangChain to manage agent logic (prompting, memory, tool usage).

- Orchestrate concurrency and scale with Ray.

- Each agent (LLM instance) is a Ray actor receiving tasks from a “controller” actor.

- Custom Docker Swarm / Kubernetes

- Spin up multiple LLM inference microservices in containers.

- A central job scheduler assigns tasks to each container.

- Agents coordinate via message queues (e.g., Kafka, RabbitMQ).

- MARL with LLM Observers

- Use RLlib (part of Ray) for multi-agent RL training.

- Integrate LLMs as “observers” or “policy modules” to interpret environment states or generate actions.
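The LangChain + Ray pattern (a controller dispatching tasks to stateful agent actors) can be sketched with only the standard library. One single-thread executor per agent approximates actor semantics: calls to the same agent run in order, while different agents run concurrently. In Ray itself, the agent class would be decorated with `@ray.remote`, created with `LLMAgent.remote(role)`, and called via `agent.handle.remote(task)`, with results collected by `ray.get(...)`. `LLMAgent.handle` is a hypothetical stub where a LangChain chain or LLM call would go.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict, List, Tuple


class LLMAgent:
    """Stateful agent; in Ray this class would be decorated with @ray.remote."""

    def __init__(self, role: str) -> None:
        self.role = role
        self.memory: List[str] = []  # per-agent history, like a LangChain memory

    def handle(self, task: str) -> str:
        self.memory.append(task)
        # Hypothetical stub: a LangChain chain or LLM call would go here.
        return f"[{self.role}] handled {task}"


class Controller:
    def __init__(self, roles: List[str]) -> None:
        self.agents: Dict[str, LLMAgent] = {r: LLMAgent(r) for r in roles}
        # One single-thread executor per agent approximates actor semantics.
        self.executors: Dict[str, ThreadPoolExecutor] = {
            r: ThreadPoolExecutor(max_workers=1) for r in roles}

    def dispatch(self, assignments: List[Tuple[str, str]]) -> List[str]:
        futures: List[Future] = [
            self.executors[role].submit(self.agents[role].handle, task)
            for role, task in assignments]
        return [f.result() for f in futures]  # analogous to ray.get(refs)

    def shutdown(self) -> None:
        for executor in self.executors.values():
            executor.shutdown()
```

Swapping the executors for Ray actors moves each agent into its own process, or onto another machine, without changing the controller's dispatch logic.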

Relevant GitHub Repositories:

5. Further Reading and Experiments

- Papers on Multi-Agent Emergence:

- Emergent Tool Use from Multi-Agent Autocurricula (OpenAI)

- Learning to Collaborate in Multi-Agent Games (DeepMind)

- Swarm Robotics Literature:

- Concepts from swarm robotics (decentralized coordination, local communication) translate well to software-based LLM agents.

- System Diagram:

- Outline how agents communicate (REST APIs, shared memory, function calls).

- Assign each agent a specific domain (e.g., code generation, summarization, data ingestion) for best results.

- Experiment with Smaller Models:

- Mistral 7B or Llama 2 (7B) are easier to deploy in parallel than 70B+ models.

Conclusion

Building Multi-Agent Swarm Architectures with LLMs is an exciting new frontier. While frameworks like LangChain and Ray provide the building blocks for distributed agent orchestration, projects like Auto-GPT and BabyAGI illustrate how LLMs can autonomously generate and complete tasks. Combine these resources with open-source LLMs like Llama 2 or Mistral, or commercial offerings like Claude, to create systems that scale, collaborate, and adapt. Explore the references above for the latest code, techniques, and research insights.

The 6-Minute Journal is a structured, guided journaling approach designed to help individuals reflect on their day, foster positivity, and develop a habit of gratitude and self-awareness. It focuses on spending just 6 minutes daily—3 minutes in the morning and 3 minutes in the evening—answering prompts to improve mental clarity and well-being.

Key Features

1. Morning Routine (3 Minutes)

• Gratitude Practice: Write 3 things you’re grateful for.

• Daily Intentions: Define what would make today great.

• Affirmations: Write positive affirmations or self-encouraging statements.

2. Evening Routine (3 Minutes)

• Daily Highlights: Reflect on 3 amazing things that happened during the day.

• Improvements: Write about how you could have made the day even better.

Benefits

• Boosts positivity by focusing on gratitude.

• Increases mindfulness through reflection and intention-setting.

• Improves mental health by promoting a sense of accomplishment.

• Develops a growth mindset by identifying areas for improvement.

Why 6 Minutes?

The time commitment is minimal, making it easier to build a consistent journaling habit, even for busy individuals. It’s a practical way to incorporate mindfulness and reflection into daily life.